Publishers at a Threshold

AI Traffic Is Not All Bad News

Over the past several months, in conversations with publishers, ad ops teams, and platform partners, a familiar concern keeps surfacing. Traffic patterns are shifting. Bots are rendering pages more convincingly. Ads are firing in sessions that never convert. Buyers are asking harder questions about quality. CPMs feel more fragile.

It’s not surprising that much of this gets grouped under a single heading: AI traffic.

Many predicted that AI systems would disrupt distribution, but what we’re seeing now is more specific. The issue isn’t simply that automated systems are accessing content. It’s that our monetization infrastructure was built for a web where most traffic was human and uncertainty about who was on the other end of a request was rare.

That assumption no longer holds.

Automation on the web is not new. Crawlers, monitoring systems, preview tools, QA environments - these have always been part of the ecosystem. What has changed is both the sophistication and the economic surface area of automation. AI agents now render full pages, execute scripts, and interact with content in ways that look increasingly similar to human browsing. When they pass through ad stacks, impressions are generated. When those impressions do not lead to performance outcomes, buyers respond the only way markets know how: by pricing in uncertainty.

The buy side is already sophisticated about invalid traffic, filtering clear fraud and pricing impressions individually based on predicted outcomes. At the same time, buyers - from performance marketers to brand advertisers - still evaluate inventory at the domain or placement level, whether through learning models or allocation decisions. When signals are blended, outcomes are blended. That makes it increasingly important for publishers to apply smarter pre-auction routing, separating high-confidence human attention before it mixes with everything else.

There’s a parallel observation on the publisher side. Most yield calculations still divide revenue by total impressions served. If those impressions include a mix of high-confidence human attention plus automation that was never likely to perform, the resulting net CPM reflects a blended denominator. That can obscure what human inventory is actually worth. As traffic composition changes, clearer actor segmentation doesn’t just help buyers price more precisely - it helps publishers understand their own economics more clearly as well.
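The blended-denominator effect is simple arithmetic. The sketch below uses purely hypothetical numbers (not measurements from any publisher) and an illustrative `Segment` type to compare net CPM per segment with the blended figure:

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """Revenue and impressions attributed to one traffic segment (hypothetical)."""
    name: str
    revenue: float    # revenue earned by this segment, in currency units
    impressions: int  # impressions served to this segment


def net_cpm(revenue: float, impressions: int) -> float:
    """Net CPM: revenue per thousand impressions served."""
    if impressions == 0:
        return 0.0
    return revenue / impressions * 1000


def blended_cpm(segments: list[Segment]) -> float:
    """Net CPM over the blended denominator: all impressions, all segments."""
    total_revenue = sum(s.revenue for s in segments)
    total_impressions = sum(s.impressions for s in segments)
    return net_cpm(total_revenue, total_impressions)


# Illustrative numbers only; not measured data.
segments = [
    Segment("high-confidence human", revenue=2400.0, impressions=800_000),
    Segment("automated / low-confidence", revenue=100.0, impressions=200_000),
]

for s in segments:
    print(f"{s.name}: ${net_cpm(s.revenue, s.impressions):.2f} CPM")
print(f"blended: ${blended_cpm(segments):.2f} CPM")
```

With these made-up inputs it prints $3.00 for the human segment, $0.50 for the automated segment and $2.50 blended: the blended figure understates human inventory by a sixth even though no human revenue was lost.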

This traffic is not necessarily fraud. It is, more precisely, non-performing traffic entering premium monetization paths.

And that is a classification problem.

Two Immediate Harms - Both Addressable

In our discussions, two patterns come up repeatedly. Neither is abstract or hand-wavy. Both are solvable with better infrastructure.

Agentic Access Without Structure

AI agents are increasingly accessing content directly - sometimes for indexing, sometimes for summarization, sometimes to answer user queries in real time. Some of this activity is constructive. Some of it may be extractive. Most of it is simply opaque.

For publishers, the available choices often feel binary: block aggressively and risk losing legitimate or future value, or allow broadly and accept that usage may be unmeasured and uncompensated.

Neither posture is especially satisfying.

A more mature approach begins with visibility. If automated access can be measured, classified, and understood in terms of actor type and intent, then policy becomes deliberate rather than reactive. Some agents may be blocked. Others may be rate-limited. Others still may be licensed or billed. At minimum, the activity is no longer invisible.
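One way to picture deliberate, rather than reactive, policy is a lookup from a classified actor to an action, with every request counted so that nothing stays invisible. This is a minimal Python sketch, assuming an upstream classifier already supplies an actor class and a stable actor ID; the class names, the `AccessGate` type and the token-bucket limits are all hypothetical, not a description of any particular product:

```python
import time
from collections import Counter
from enum import Enum
from typing import Optional


class Action(Enum):
    ALLOW = "allow"
    RATE_LIMIT = "rate_limit"
    LICENSE = "license"  # allowed, but metered so it can be billed
    BLOCK = "block"


# Hypothetical policy table: actor class -> action. The class names are
# illustrative; a real deployment would use its own classifier's labels.
POLICY = {
    "human": Action.ALLOW,
    "search_crawler": Action.ALLOW,
    "licensed_ai_agent": Action.LICENSE,
    "unverified_ai_agent": Action.RATE_LIMIT,
    "known_scraper": Action.BLOCK,
}


class TokenBucket:
    """Token bucket: refills at `rate` tokens/second, holds at most `capacity`."""

    def __init__(self, rate: float, capacity: float, now: float):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity
        self.last = now

    def allow(self, now: float) -> bool:
        # Refill for the time elapsed since the previous check, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class AccessGate:
    """Applies a policy per request and records every request, so access is visible."""

    def __init__(self, policy: dict, rate: float = 1.0, capacity: float = 5.0):
        self.policy = policy
        self.rate = rate
        self.capacity = capacity
        self.buckets: dict = {}
        self.seen = Counter()      # requests observed per actor class
        self.billable = Counter()  # metered requests per licensed actor

    def decide(self, actor_class: str, actor_id: str, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        self.seen[actor_class] += 1
        # Unknown classes are rate-limited rather than blocked outright.
        action = self.policy.get(actor_class, Action.RATE_LIMIT)
        if action is Action.BLOCK:
            return False
        if action is Action.LICENSE:
            self.billable[actor_id] += 1
            return True
        if action is Action.RATE_LIMIT:
            bucket = self.buckets.get(actor_id)
            if bucket is None:
                bucket = self.buckets[actor_id] = TokenBucket(self.rate, self.capacity, now)
            return bucket.allow(now)
        return True


gate = AccessGate(POLICY, rate=1.0, capacity=2.0)
print(gate.decide("known_scraper", "bot-a", now=0.0))  # False
print([gate.decide("unverified_ai_agent", "bot-b", now=0.0) for _ in range(3)])  # [True, True, False]
print(gate.decide("unverified_ai_agent", "bot-b", now=1.0))  # True, one token refilled
```

Defaulting unknown classes to rate limiting, rather than blocking, reflects the point that uncertain automation is not necessarily hostile, while the `seen` and `billable` counters are what make licensing or billing possible at all.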

The point is not to assume that all automation is hostile. It is to acknowledge that automation is heterogeneous, and should be treated accordingly.

Automation in the Auction

The second issue is closer to home for revenue teams. When automated traffic renders ads but does not produce meaningful engagement or conversion, it affects how inventory is perceived. Buyers do not typically have the granularity to parse every source of uncertainty. They respond to performance signals.

If uncertainty increases, bids adjust downward.

Many teams we speak with are reluctant to simply block ambiguous traffic, and for good reason. False positives are costly. Traffic that is uncertain is not necessarily invalid. At the same time, allowing all traffic to enter premium demand paths can erode trust over time.

This is where classification becomes economically meaningful. When impressions can be tagged server-side with a validated actor type and a confidence tier before they enter the auction, publishers gain optionality. High-confidence human traffic can be routed to premium demand. Lower-confidence or automated traffic can be downgraded, redirected, or monetized differently.

The framing shifts from “block or allow” to “how should this participate?”

Block when certain. Route when uncertain.

That simple shift can protect yield without discarding value.
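"Block when certain, route when uncertain" maps naturally onto a small routing function run server-side before the auction. The sketch below assumes a hypothetical tag schema (`ImpressionTag`, carrying an actor type and a confidence from 0 to 1) and illustrative thresholds of 0.9 and 0.99; real tiers and demand paths would depend on the publisher's stack:

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    PREMIUM = "premium_demand"    # high-confidence human attention
    STANDARD = "standard_demand"  # downgraded or alternative monetization path
    NO_ADS = "no_ads"             # withheld from the auction entirely


@dataclass(frozen=True)
class ImpressionTag:
    """Hypothetical server-side tag attached to an impression before the auction."""
    actor_type: str    # e.g. "human", "ai_agent", "crawler"
    confidence: float  # classifier confidence in actor_type, from 0.0 to 1.0

    def __post_init__(self) -> None:
        if not 0.0 <= self.confidence <= 1.0:
            raise ValueError("confidence must be between 0.0 and 1.0")


# Illustrative thresholds; real values would be tuned against outcome data.
HIGH_CONFIDENCE = 0.9
CERTAIN = 0.99


def confidence_tier(confidence: float) -> str:
    """Bucket a raw confidence score into a coarse tier."""
    if confidence >= CERTAIN:
        return "certain"
    if confidence >= HIGH_CONFIDENCE:
        return "high"
    return "uncertain"


def route(tag: ImpressionTag) -> Route:
    tier = confidence_tier(tag.confidence)
    # Block when certain: confidently automated impressions stay out of the auction.
    if tag.actor_type != "human" and tier == "certain":
        return Route.NO_ADS
    # High-confidence human attention is routed to premium demand.
    if tag.actor_type == "human" and tier in ("certain", "high"):
        return Route.PREMIUM
    # Route when uncertain: everything else is downgraded, not discarded.
    return Route.STANDARD


print(route(ImpressionTag("human", 0.95)))      # Route.PREMIUM
print(route(ImpressionTag("human", 0.60)))      # Route.STANDARD
print(route(ImpressionTag("ai_agent", 0.995)))  # Route.NO_ADS
print(route(ImpressionTag("ai_agent", 0.70)))   # Route.STANDARD
```

Here `NO_ADS` governs ad participation only; whether the same actor may read the content at all is a separate access decision.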

A Broader Way of Thinking About Actors

There is a temptation in the current moment to treat the debate as moral or existential: humans versus bots, creators versus AI. That framing is understandable, but it may not be the most productive.

AI agents are not going away. They are increasingly acting on behalf of users - researching, summarizing, recommending, and soon transacting. In many cases, they are intermediaries rather than adversaries.

If we step back, every request to a publisher’s site has three characteristics:

An actor.

An intent.

An economic implication.

The infrastructure of the web has historically treated most actors as interchangeable until proven otherwise. That worked when ambiguity was low. It works less well now.

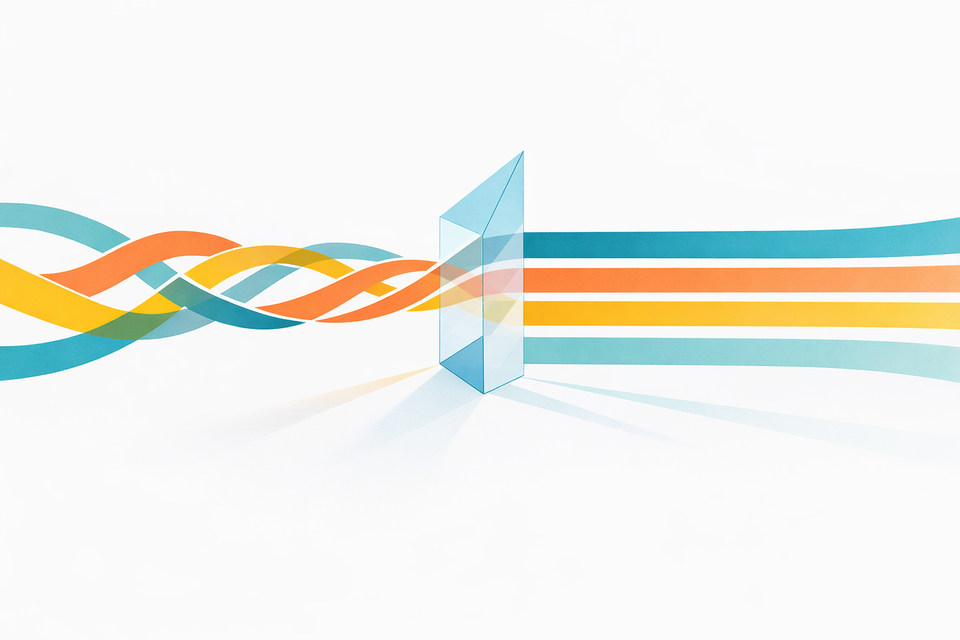

A more durable approach is to treat content access as a threshold. Actors approach - human readers, AI agents, monitoring systems, crawlers. The publisher defines how each class of actor crosses that threshold. Some subscribe. Some license. Some are rate-limited. Some are blocked. Some are routed to different monetization paths.

This is less about defending against AI and more about acknowledging that the web now includes multiple classes of participants. The goal is not to eliminate automation, but to align it economically.

AI traffic is not all bad news.

When we hear that “AI traffic is hurting publishers,” the instinct is to view automation as the problem itself. But traffic, in isolation, is neutral. The harm arises when we cannot distinguish, classify, and price it appropriately.

If we treat all automated traffic as malicious, we risk closing off future revenue models and productive relationships. If we treat all automated traffic as equivalent to human attention, we risk continued yield erosion.

The opportunity lies in actor-aware economics - in systems that can discern who or what is requesting access, how confident we are in that classification, and how that traffic should be monetized.

We are at a threshold. The web is no longer composed of a single class of visitor, and monetization models built for a mostly human audience are straining under that assumption. Publishers that adapt - by classifying actors, routing traffic intentionally, and aligning policy with economics - can cross into a more holistic model of monetization, one that treats human and AI traffic according to their distinct value and opportunity.

The shift is already underway. The advantage will accrue to those who treat it as infrastructure, not as noise.

Learn more at https://paywalls.net/vai
